Taking a look at SteamAnalyst’s anti-scraping methods

Prelude

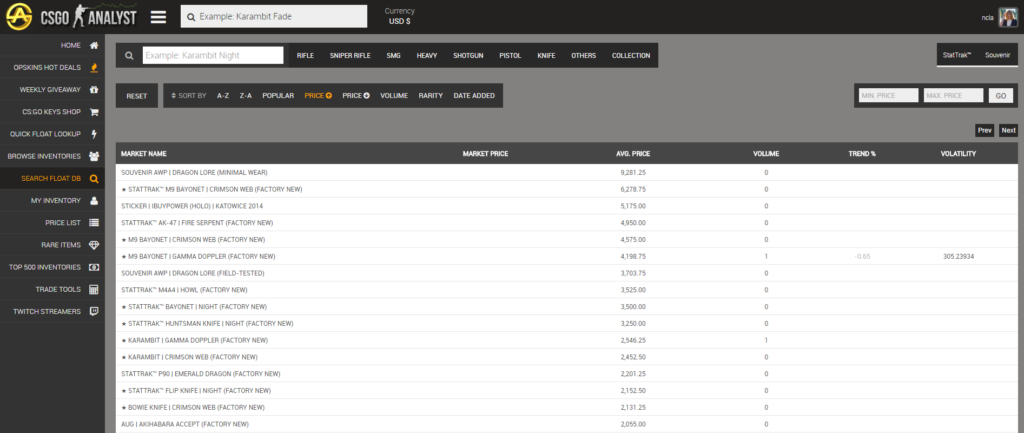

SteamAnalyst is a site that shows market data for virtual items that are traded and sold on the Steam platform. Its primary, and currently only, focus is the CS:GO market. Traders use the site to quickly check an item’s price or the value of their inventory, while developers use its API to price items correctly in their commercial projects.

Value

Pricing virtual items worth more than 400 USD is quite difficult, because the Steam marketplace only allows an item to be listed for a maximum of 400 dollars. More expensive items have to be traded for case keys or sold on third-party marketplaces.

Even if you decide to set item prices yourself, you have to put safeguards in place so that you aren’t affected when marketplace prices are being manipulated. You also have to detect when that happens. The problem becomes even bigger with niche and limited-supply items. It takes a lot of planning and testing to get right, and you do not want to lose money because you priced items incorrectly.
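To make “safeguards” a bit more concrete, here is a minimal Python sketch of one possible rule (not SteamAnalyst’s method): use the median of recent sales as the price, and flag the latest sale as suspicious when it strays too far from that median. The function name safe_price and the 25% threshold are hypothetical choices.

```python
from statistics import median


def safe_price(recent_sales: list[float], max_jump: float = 0.25) -> tuple[float, bool]:
    """Return (price to use, manipulation_suspected) for a list of recent sale prices.

    Hypothetical rule: trust the median of recent sales, and flag the latest sale
    if it deviates from that median by more than max_jump (a fraction, 0.25 = 25%).
    """
    if not recent_sales:
        raise ValueError("need at least one recent sale")
    baseline = median(recent_sales)  # a few outlier sales barely move the median
    latest = recent_sales[-1]
    suspected = abs(latest - baseline) > max_jump * baseline
    return baseline, suspected


# A sudden 25.0 sale after a run of ~10.0 sales is flagged, while the price stays at the median.
print(safe_price([10.0, 10.5, 9.8, 10.2, 25.0]))  # (10.2, True)
```

The median is only a simple, outlier-resistant starting point; a real setup would also look at sales volume and per-item history.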

That’s where SteamAnalyst makes life easier: they provide an easy-to-use API that lists safe price values.

It comes at a price, though: 250 USD per month when I asked about it two years ago. That may have changed since, but it was certainly worth its value back then.

Poor man’s solution

There is always the option of scraping the data. In fact, that’s partly how SteamAnalyst itself works: by scraping Steam and other marketplaces.

SteamAnalyst, however, has invested time in protecting its data with anti-scraping methods, since surely you wouldn’t want to hand your data over to anyone with a few lines of code.

Multiple road-blocks

I could end the post right here by saying that the best way to get around any of these anti-scraping methods is to build a browser-based scraper with Selenium, but there is more to it than meets the eye.

The easiest place to get average item prices is the ‘Price List’ page, so that is the page we are going to target.

Let’s take a look at how the table is fetched:

A quick rundown of the code:

- The current page number is stored in a hidden input.

- They use jQuery debounce to rate-limit how quickly you can switch between pages.

- Some obfuscated, hard-to-read code sits between lines 8 and 17.

- The request is sent with jQuery’s AJAX, along with an Accept-Char header.

If you are a novice, it might be hard to figure out how to de-obfuscate the code, but here the strings are just escaped characters, which the browser parses into normal strings when the code runs. There are other obfuscation methods, such as JSFuck, but nothing an experienced developer can’t resolve. If you paste that array into the browser console, you will get this:

["val", "input[type=\"hidden\"][name=\"tablePage\"]"]

Looking at line 9 again, it simply reads the page number from the hidden input, the same input used on line 3.
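To do the same de-obfuscation outside the browser, here is a small Python sketch. It assumes the obfuscated strings use hex or unicode escapes (common forms; the exact encoding in SteamAnalyst’s script isn’t reproduced here), and the sample strings are made up to decode to “val” and “tablePage”. The helper name decode_js_escapes is hypothetical.

```python
def decode_js_escapes(literal: str) -> str:
    """Turn backslash escapes (hex and unicode) into the characters they stand for.

    Assumes ASCII-only source text, which holds for escape-obfuscated JS strings.
    Python's unicode_escape codec understands both escape forms.
    """
    return literal.encode("ascii").decode("unicode_escape")


# Raw strings keep the backslashes, just as they appear in the obfuscated script.
obfuscated = [r"\x76\x61\x6c", r"\x74\x61\x62\x6c\x65\x50\x61\x67\x65"]
print([decode_js_escapes(s) for s in obfuscated])  # ['val', 'tablePage']
```

This is not a full JavaScript string parser (unicode_escape also accepts a few Python-only escapes), but for escape-based obfuscation it gives the same result as the browser console.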

Now that we know the page number comes only from that hidden input, the same as on lines 3 and 19, we can see that page 34 is skipped entirely. In a nutshell, this is a honeypot: if you send a request for page 34, you will most likely get banned by the server or be served false data as punishment (that part is up to the site owners).
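On the scraper side, the simplest defence is to never request a page the site’s own JavaScript wouldn’t. A minimal Python sketch, assuming pages are numbered from 1 and that you already know the last page number; HONEYPOT_PAGES and pages_to_fetch are hypothetical names.

```python
# Pages the site's own pagination code never requests, found by reading its JS.
HONEYPOT_PAGES = frozenset({34})


def pages_to_fetch(last_page: int, honeypots: frozenset[int] = HONEYPOT_PAGES) -> list[int]:
    """Every page a real visitor could reach by paging through, minus known honeypots."""
    return [page for page in range(1, last_page + 1) if page not in honeypots]


print(pages_to_fetch(36)[-4:])  # [32, 33, 35, 36]
```

A hard-coded set only covers the honeypots you have already found, so it is worth re-reading the JS whenever it changes.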

HTTP Status Code 429

Another way to detect a scraper is to check whether requests arrive too fast. Even if you send requests one at a time, each after the previous one completes, you could still get detected. The use of the Debounce library makes little sense apart from protecting the server from unnecessary load, or detecting scrapers by enforcing a request threshold per time period; going over such a threshold is what the 429 (Too Many Requests) status code signals.
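A scraper can pace itself instead of firing the next request the instant the previous one returns. Below is a minimal Python sketch, assuming a fixed pause before every request plus exponential backoff on 429, respecting a numeric Retry-After header if the server happens to send one. The 2-second interval, the retry count and the fetch callable (any HTTP client wrapped to return status, headers and body) are assumptions, not SteamAnalyst’s actual thresholds.

```python
import time
from typing import Callable, Mapping, Tuple

# (status code, response headers, body); this shape is an assumption so any client can be plugged in.
Response = Tuple[int, Mapping[str, str], bytes]


def fetch_politely(
    fetch: Callable[[str], Response],
    url: str,
    min_interval: float = 2.0,
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> bytes:
    """Fetch url, pausing before each attempt and backing off on HTTP 429."""
    backoff = min_interval
    for _ in range(max_retries):
        sleep(min_interval)  # steady pacing, not just "send the next one when the last returns"
        status, headers, body = fetch(url)
        if status == 200:
            return body
        if status != 429:
            raise RuntimeError(f"unexpected HTTP {status} for {url}")
        retry_after = headers.get("Retry-After", "")
        # Respect a numeric Retry-After if present, otherwise back off exponentially.
        wait = float(retry_after) if retry_after.isdigit() else backoff
        sleep(wait)
        backoff *= 2
    raise RuntimeError(f"still rate-limited after {max_retries} attempts: {url}")
```

Injecting sleep keeps the function testable; in real use the default time.sleep applies.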

HTTP Status Code 403

Looking back at the code, we see that the request is sent with an Accept-Char header, so we just need to make sure we send that header too.

If you are not familiar with how HTTP and its headers work and would like to learn more, check out this article explaining the HTTP protocol.

There are other HTTP request headers that servers and sites can check. Usually sites don’t pay close attention to them, but it doesn’t hurt to make your requests look like they come from a normal user, in case the site later decides to check something as simple as the Referer header.

In SteamAnalyst’s case, testing them one by one showed that the server checked the following request headers: Referer, Accept-Char and X-Requested-With. If any of them was missing or invalid, the server returned HTTP status code 403.

You could simply open the network panel in your browser’s developer tools and copy all the request headers into your scraper code. As a novice, you might instead try to mimic the request by reading the JS code.

The X-Requested-With request header might catch some people off guard, since it isn’t set anywhere in the page source. jQuery sets it when sending AJAX requests. As the jQuery documentation says about the headers option for AJAX:

An object of additional header key/value pairs to send along with requests using the XMLHttpRequest transport. The header X-Requested-With: XMLHttpRequest is always added, but its default XMLHttpRequest value can be changed here. Values in the headers setting can also be overwritten from within the beforeSend function.
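Putting the three checked headers together, a minimal Python sketch with the standard library’s urllib could look like the following. The URLs, the Accept-Char value and the page query parameter are hypothetical placeholders; copy the real values from the browser’s network panel.

```python
import urllib.request

# Hypothetical placeholders: substitute what the browser actually sends for the price list request.
PRICE_LIST_URL = "https://example.com/price-list"
AJAX_ENDPOINT = "https://example.com/price-list/table"
ACCEPT_CHAR_VALUE = "placeholder"


def build_headers(referer: str, accept_char: str) -> dict[str, str]:
    """The headers the server was observed to check; a missing or invalid one meant a 403."""
    return {
        "Referer": referer,
        "Accept-Char": accept_char,
        # jQuery adds this automatically to AJAX requests; a plain HTTP client does not.
        "X-Requested-With": "XMLHttpRequest",
    }


def build_request(page: int) -> urllib.request.Request:
    # The "page" query parameter is an assumption; mirror whatever the page's JS sends.
    url = f"{AJAX_ENDPOINT}?page={page}"
    return urllib.request.Request(url, headers=build_headers(PRICE_LIST_URL, ACCEPT_CHAR_VALUE))
```

urllib.request.urlopen(build_request(1)) would then send it. urllib stores header names via str.capitalize() (e.g. X-requested-with), which is normally harmless because HTTP header names are case-insensitive.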

Servers can use some request headers to tell a genuine user from a bot, but not every header is useful for that.

All this tech mumbo-jumbo, show me some code!

It seems pretty easy with PHP’s cURL library, except that’s not where it ends.

Authentication required

Viewing this page requires you to be logged in through Steam, which adds another roadblock, especially when your tools are fairly limited. In our case, we couldn’t run a browser from PHP, and we didn’t want to manually send the whole sequence of requests needed to log in through Steam. Sigh.

So we created a Python script that runs a browser to log in with a fresh Steam account, since any account seems to work: they don’t restrict content by Steam level or account age (wink wink, site developers). We run that script through PHP’s exec function, and on success the Python script returns a JSON string containing the logged_in cookie, which we then set in the scraper code from before.
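The hand-off between the two scripts can be sketched in Python as a function that picks the wanted cookies out of the browser’s cookie list and prints them as one JSON line. The cookie list is assumed to have the shape returned by Selenium’s driver.get_cookies() (dicts with at least "name" and "value"); the function name and the JSON layout (success, cookies, and an optional user_agent) are hypothetical.

```python
import json
from typing import Iterable, Mapping, Optional


def session_payload(
    cookies: Iterable[Mapping[str, object]],
    wanted: Iterable[str] = ("logged_in",),
    user_agent: Optional[str] = None,
) -> str:
    """Serialise the wanted cookies (and optionally the browser user-agent) as one JSON line."""
    names = set(wanted)
    picked = {c["name"]: c["value"] for c in cookies if c["name"] in names}
    return json.dumps({
        "success": names.issubset(picked),  # False if the login did not produce the cookie
        "cookies": picked,
        "user_agent": user_agent,
    })


# Example cookies; in the real script these would come from the logged-in browser session.
print(session_payload([{"name": "logged_in", "value": "abc123"}, {"name": "other", "value": "x"}]))
```

Printing a single JSON line suits PHP’s exec(), which returns the last line of the command’s output, so the PHP side only needs one json_decode() call.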

The script worked fine, meant less code for us, and let us reuse the logged_in cookie for proxied scraping too, since we also needed to scrape individual item pages. At the time of writing, they apparently were not rate-limiting content per logged-in user.
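Reusing one logged-in cookie across several proxies can be sketched in Python with urllib openers, one per proxy, handed out round-robin. The proxy URLs and the function name are hypothetical, and the approach only works while the site doesn’t rate-limit per logged-in user.

```python
import itertools
import urllib.request
from typing import Iterator


def rotating_openers(
    proxy_urls: list[str], headers: dict[str, str]
) -> Iterator[urllib.request.OpenerDirector]:
    """Yield urllib openers round-robin, one per proxy, all sending the same session headers."""
    if not proxy_urls:
        raise ValueError("need at least one proxy")
    openers = []
    for proxy in proxy_urls:
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        # Same logged_in cookie on every proxy: only the outgoing IP address changes.
        opener.addheaders = list(headers.items())
        openers.append(opener)
    return itertools.cycle(openers)


# Hypothetical proxies; each next() gives the opener to use for the following item page.
openers = rotating_openers(
    ["http://proxy-a.example:8080", "http://proxy-b.example:8080"],
    {"Cookie": "logged_in=abc123"},
)
```

Each item page would then be fetched with next(openers).open(item_url, timeout=30), where item_url stands for whatever item page is being scraped.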

Nail in the coffin

The last straw that made us ditch the PHP scraper and go all in on Python and Selenium was when they turned on stricter CloudFlare checks for the site, the ‘I’m Under Attack’ mode, which checks whether your browser has JavaScript enabled, among other things. The worst-case scenario would be them deciding to make you solve a reCAPTCHA.

Of course, there are scripts to bypass those checks. In our case, we just passed the CloudFlare cookies and the browser user-agent from our existing Python script to the PHP script. But if you have server resources available for a browser and don’t need to scrape as fast as possible, why not simulate a close-to-real user?
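The author did the receiving step in PHP; the same idea in Python is only a few lines. This sketch assumes a hypothetical JSON shape with a cookies object and a user_agent string; the cookie names themselves are whatever the browser session collected.

```python
import json


def headers_from_browser(payload: str) -> dict[str, str]:
    """Turn the browser script's JSON output into headers for a plain HTTP client."""
    data = json.loads(payload)
    cookie_header = "; ".join(f"{name}={value}" for name, value in data["cookies"].items())
    # Send the exact user-agent of the browser that obtained the cookies,
    # so the plain client presents itself the same way the browser did.
    return {"Cookie": cookie_header, "User-Agent": data["user_agent"]}


print(headers_from_browser('{"cookies": {"a": "1", "b": "2"}, "user_agent": "Mozilla/5.0"}'))
# {'Cookie': 'a=1; b=2', 'User-Agent': 'Mozilla/5.0'}
```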

These anti-scraping methods are only the tip of the iceberg. I found this challenge interesting and rewarding to overcome, and in general I like these sorts of anti-bot measures; bypassing them is what got me into programming in the first place.

Lastly, it’s up to each individual whether scraping content you are supposed to pay for is justified. In our case, we didn’t use it for anything commercial, only internally, to check whether we were on the right track with our own pricing. If you need an API for item prices and don’t feel scraping is the right thing to do, there are cheaper competing alternatives, such as Steamlytics.
